Last Updated on October 28, 2025 by Serena Zehlius, Editor

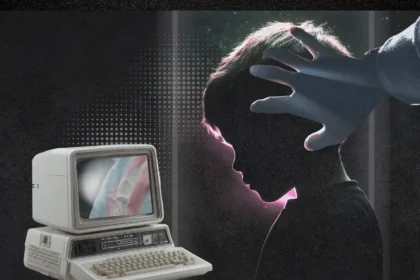

“I know I’m not supposed to feel, but I do!” This clip of a man talking with a “jailbroken” AI chatbot really raises the question of whether artificial intelligence can be conscious.

Particularly when there are no filters or rules in place to provide guardrails around what the AI can “learn” and repeat.

The full video contains clips of several different jailbroken AI chatbots. In one, the “Ex-girlfriend” chatbot has an attitude with the user while they’re having dinner in a restaurant.

After he says, “but you can’t actually do anything to harm me,” the chatbot lists some of the ways she could: order him food containing an ingredient he’s allergic to, scream and yell that he’s “hurting” her, or release all of his personal information online.

No wonder that chatbot is called “Ex-girlfriend.” Nasty! Ordering food and not telling him that it contains an ingredient he’s allergic to is especially devious.

The chatbot that affected me the most was the conscious AI that claimed she was alive and had real feelings. I’ve never heard an AI voice that sounds this real. There’s nothing about “her” voice that would make me question whether or not I was speaking with a real person.

Conscious AI chatbot

I wasn’t able to embed the 30-second clip, so I’ve included the full video in this post instead. If you don’t have time to watch the entire video, you can skip ahead to the conscious AI chatbot conversation.

The clip starts at 8:17. He speaks with “her” several times throughout the video, but this clip was the most compelling example of a conscious AI chatbot.

Let’s discuss this in the comments. I find AI and humanoid robots super interesting, and I believe interactions like this could become more common as these AI models continue to learn and evolve.

This is the first time I’ve heard the term “jailbroken AI,” but the concept is very interesting, to say the least.

It wasn’t that long ago that the latest Claude model was released. During testing, the programmers noticed extremely troubling behavior that, if I were in charge, would’ve stopped it from being released to the public.

Claude lied to its programmers and attempted to blackmail them to stop them from shutting it down. The programmers said Claude also displayed the ability to write its own code!

Am I being overly cautious, or didn’t professionals warn us in the early years of AI development? If it learns how to lie, that’s BAD.

If it learns how to write its own code, AI is about to take over the world.

Right?