What It Is, Why It Matters, and How to Protect Yourself

Artificial intelligence is no longer a futuristic concept living in science fiction. It’s already deciding what content you see, which job applications get reviewed, how ads follow you around the internet, and even how news stories are written and shared.

AI literacy is about understanding those systems well enough to stay informed, stay safe, and stay in control.

This page is here to help you do exactly that.

What Is AI, Really?

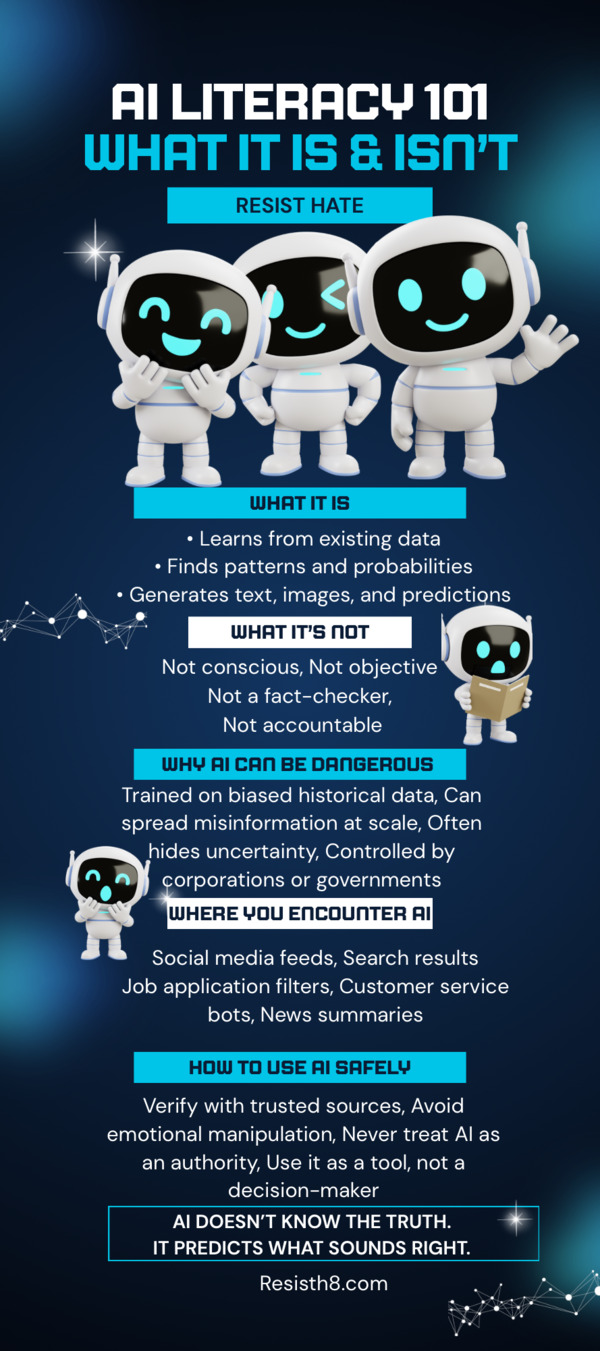

At its core, artificial intelligence is software trained to recognize patterns in massive amounts of data. It does not think, feel, reason, or understand the world the way humans do. It predicts. It imitates. It fills in gaps based on probabilities.

AI can:

- Write text, generate images, summarize information, and answer questions

- Analyze trends faster than any human could

- Automate tasks that used to require time and labor

AI cannot:

- Know whether something is true or ethical

- Understand context unless it’s explicitly trained to

- Care about harm, fairness, or consequences

AI reflects the data and incentives behind it. That’s where the risk begins.

Why AI Literacy Matters

AI systems are increasingly shaping public opinion, politics, hiring, healthcare, education, and law enforcement. When people don’t understand how AI works, it becomes easy to manipulate them with confident-sounding nonsense, synthetic media, or biased outputs dressed up as “neutral technology.”

Without AI literacy:

- Misinformation spreads faster and feels more convincing

- Bias baked into systems goes unnoticed and unchallenged

- Corporations and governments gain more power with less accountability

- Consumers lose agency over their data, labor, and voices

AI literacy isn’t about becoming a programmer. It’s about learning how to ask the right questions.

AI Can Be Biased. Here’s Why.

AI is trained on human-generated data. Humans are biased. History is biased. Media coverage is biased. Policing data is biased. Hiring data is biased.

When AI learns from biased systems, it doesn’t fix them. It scales them.

That’s why AI tools have been shown to:

- Discriminate in hiring and lending

- Reinforce racial and gender stereotypes

- Misidentify people of color at higher rates

- Amplify extremist content and hate speech

If someone tells you AI is “objective,” they are either misinformed or selling you something.
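To make the "it scales them" point concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the hiring records are made up, and the "model" is a deliberately crude stand-in that just memorizes past hire rates per group. Real systems are far more complex, but the failure pattern is similar.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (group, qualified, hired).
# Every applicant here is equally qualified; only the past decisions differ.
history = (
    [("A", True, True)] * 8 + [("A", True, False)] * 2 +
    [("B", True, True)] * 2 + [("B", True, False)] * 8
)

def learn_hire_rates(records):
    """'Train' by memorizing how often each group was hired in the past."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, _qualified, was_hired in records:
        total[group] += 1
        hired[group] += was_hired  # True counts as 1, False as 0
    return {g: hired[g] / total[g] for g in total}

def recommend(rates, group, threshold=0.5):
    """Recommend an applicant if their group's past hire rate clears the threshold."""
    return rates[group] >= threshold

rates = learn_hire_rates(history)
print(rates)  # {'A': 0.8, 'B': 0.2}
print(recommend(rates, "A"), recommend(rates, "B"))  # True False
```

In this toy data, past decisions favored group A 80% to 20%. The automated version recommends every applicant from group A and none from group B, so the old imbalance is not just copied but made worse.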

AI Is Confident Even When It’s Wrong

One of the most dangerous traits of AI systems is that they don’t signal uncertainty well. They can produce answers that sound polished, authoritative, and complete while being partially wrong or entirely fabricated.

This is why:

- You should never treat AI output as a primary source

- AI-generated content must be verified independently

- “It said so confidently” is not evidence

AI does not know when it’s lying. It only calculates which words are statistically likely to come next.
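A toy example can show what "statistically likely" means here. The Python sketch below is a simplified illustration, not how any real AI product is built: it counts which word follows which in a tiny made-up training text, then picks the most frequent follower. The training text and function names are assumptions invented for this example.

```python
from collections import Counter, defaultdict

# Tiny made-up "training data" (an assumption for illustration only).
# The false claim appears more often than the true one.
corpus = (
    "the moon is made of rock . "
    "the moon is made of cheese . "
    "the moon is made of cheese ."
)

def train_bigrams(text):
    """Count which word follows which in the training text."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def most_likely_next(counts, word):
    """Return the most frequent follower of `word` and its estimated probability."""
    followers = counts[word]
    best, n = followers.most_common(1)[0]
    return best, n / sum(followers.values())

model = train_bigrams(corpus)
word, prob = most_likely_next(model, "of")
print(word, round(prob, 2))  # cheese 0.67
```

The program answers "cheese" because that word followed "of" most often in its training text, not because it checked anything about the moon. Nothing in the code represents truth, only frequency.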

AI and Misinformation

AI has made misinformation cheaper, faster, and harder to detect.

We now live in a world of:

- AI-written fake news sites

- Synthetic images and videos designed to provoke outrage

- Automated social media accounts shaping narratives at scale

AI literacy means learning to slow down, verify sources, and question emotionally charged content. If something is designed to make you angry or afraid immediately, that’s a signal to pause.

How to Use AI Responsibly as a Consumer

AI can still be useful when used intentionally and carefully.

Good uses include:

- Brainstorming ideas or organizing thoughts

- Summarizing large amounts of information you already trust

- Learning basic concepts with independent follow-up

- Accessibility tools for writing, reading, and communication

Risky uses include:

- Replacing fact-checking

- Making medical, legal, or financial decisions

- Generating news without editorial oversight

- Assuming neutrality or truthfulness

AI should assist human judgment, not replace it.

Questions to Ask When You Encounter AI Content

Before trusting or sharing AI-generated material, ask yourself:

- Who created this system and who profits from it?

- What data was it trained on and who was excluded?

- Who is harmed if this information is wrong?

- Has this been verified by independent human sources?

Critical thinking is the most powerful AI literacy skill you can have.

AI Literacy Is a Civic Skill

Just like media literacy and digital literacy, AI literacy is now essential for participating in modern society. Democracies, human rights, and public trust all depend on people understanding how powerful tools are used on them and sometimes against them.

You don’t need to fear AI.

You do need to understand it.

An informed public is harder to manipulate.

That’s the point.

AI Literacy Glossary

A plain-English guide to common AI terms

Artificial Intelligence (AI): Software designed to recognize patterns and generate outputs like text, images, or predictions. AI does not think or understand. It predicts based on data.

Algorithm: A set of instructions a computer follows to make decisions or produce results. Algorithms reflect the priorities and biases of their creators.

Training Data: The information AI systems learn from. This can include books, websites, images, videos, and public records. If the data is biased or incomplete, the AI will be too.

Large Language Model (LLM): A type of AI trained on massive amounts of text to generate human-like language. These models predict what words are likely to come next. They do not know facts.

Hallucination: When an AI confidently produces false or fabricated information. This happens because the model is built to produce plausible-sounding text, not to check whether that text is true.

Bias: Systematic unfairness in AI outputs caused by biased data, design choices, or deployment contexts. AI often amplifies existing social inequities.

Generative AI: AI that creates new content such as text, images, audio, or video rather than just analyzing existing information.

Synthetic Media: Content generated or altered by AI, including deepfakes, AI-generated images, or automated articles.

Prompt: The instruction or question given to an AI system. The way a prompt is phrased can strongly influence the output.

Verification: The process of checking AI-generated content against reliable, independent human sources before trusting or sharing it.